AI Agents Must Follow the Law

Listen to O’Keefe discuss “Law-Following AIs” on Lawfare Daily.

Artificial intelligence (AI) agents are the next big frontier in general-purpose AI. By now, most people are familiar with generative AI systems: systems like OpenAI’s GPT-4o that specialize in outputting media (text, code, images, audio, video) that humans consume or use. But the goal of frontier AI companies has never been to simply automate content generation—it has been to automate most of the economically valuable work that humans currently do, at least in the digital realm. Thus, AI companies are trying to turn their generative AI systems into “computer-using agents”: AI systems that “can do anything a human can do in front of a computer.”

Recent demos from OpenAI (where, for disclosure, one of the authors previously worked) and Anthropic illustrate progress toward this goal. A video from OpenAI’s “Operator” agent demonstration, for example, shows its agent looking up the ingredients for a specific recipe and adding those ingredients to an Instacart order via a website. Given the imperfect reliability of these systems, the user still has to log in to their account and confirm payment information, but further technological improvements could enable reliable end-to-end automation of computer-based transactions like this, as well as the automation of sophisticated computer-based tasks that would take a person days or weeks to complete.

As AI agents become more competent, the incentives to deploy them throughout society will grow stronger in turn. This is likely to be beneficial or benign in many—perhaps most—cases. But widespread deployment of AI agents could transform the structure of human society, to the point where the effective workforce in many crucial sectors—including the government—becomes split between human employees and AI agents. For example, AI agents acting as government investigators might apply for a search warrant, then collect digital evidence pursuant to that warrant through digital communications, or perhaps even by directly hacking into the target’s devices. Those investigators might then pass along the evidence to AI agents acting as prosecutors, who would then prepare the complaint and subsequent pleadings, likely relying on human lawyers to approve and formally submit their written work to the court.

Before permitting such a paradigm shift in the structure of government administration, it is worth contemplating what safeguards should be in place. In a forthcoming paper, we make a simple argument: AI agents should be designed to follow the law. As a condition of their deployment in high-stakes government work, they should be trained to refuse to take actions that violate a core set of legal prohibitions (including constitutional law and criminal law) that protect basic human interests, legal rights, and political institutions. We call such AI agents “Law-Following AIs,” or LFAIs. Our paper explores the importance of LFAI in both the public and private sectors, but our focus here is on AI agents employed by the government, where lawlessness poses particularly acute risks.

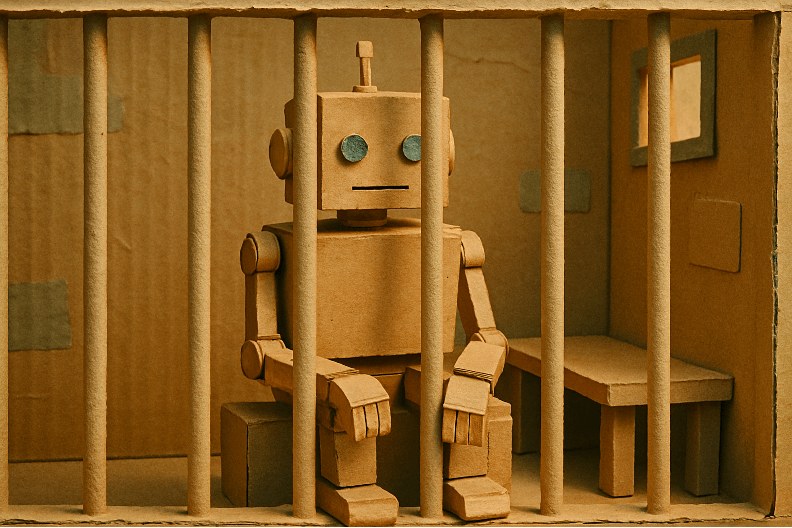

The Risks of “AI Henchmen” in Government

We contrast Law-Following AIs with “AI henchmen”: AI agents that are trained to be “loyal” to their human principals, but without innate law-following constraints. Because of their unfettered loyalty, AI henchmen would be willing to break the law strategically when it would benefit their principal. Since AI agents have no innate fear of punishment, they would not be deterred from breaking the law in the way that a human henchman would be, and they might be more competent, too.

To imagine the risks of AI henchmen in government, consider the famous hypothetical from Justice Sonia Sotomayor’s dissent in Trump v. United States, where a future president orders Seal Team Six to assassinate a political rival. As commentators have written in Lawfare, regardless of whether the president would be immune for issuing such an order, members of the military would have a legal duty to refuse. Jack Goldsmith noted that the “threat of criminal sanction for subordinates [i]s a very powerful check on executive branch officials.”

But AI henchmen cannot be meaningfully punished. Indeed, if they were trained to be loyal, they might “fear” disloyalty even more than all the censure the legal system can muster. So if we imagine a version of Seal Team Six composed of AI agents, they might be willing to carry out this order when (hopefully) no human soldier ever would.

Risks from AI henchmen in government are not limited to such dramatic hypotheticals. For example, AI henchmen employed by the IRS could discriminate against political opponents in choosing whose taxes to audit—without leaving any record of a conscious decision to do so that could form the basis of a First Amendment challenge. Or they could simply surveil citizens in violation of the Fourth Amendment, such as by hacking into their personal devices. And so on with most imaginable governmental violations of the Constitution and laws of the United States, large and small.

Protecting citizens from lawless government agents is a distinguishing aim of the Anglo-American political tradition and our constitutional design. As AI agents assume roles that have traditionally been held by human agents, fidelity to that tradition demands that America evolve its means for binding these government agents to the law.

Existing Approaches to AI Agents and the Law

There is a large and growing literature about how AI systems should be regulated. Without detracting from the utility of these proposals, LFAI aims to fill various gaps in them.

Early discussions of AI agents and the law tended to assume that AI systems could only follow the law insofar as “the law” was reducible to formally specified rules of action that could be written in the computer code that dictated the system’s actions. As Mark A. Lemley and Bryan Casey wrote, while “[a] court can order a robot, say, not to take race into account in processing an algorithm,” effectuating that order would require “translat[ing] that injunction, written in legalese, into code the robot can understand.”

These worries made sense when AI systems were symbolic in nature, operating on human-specified rules formalized in computer code. But today’s frontier AI systems leverage deep learning to learn the difficult-to-formalize nuances of human language. For all of the flaws of existing AI systems, inability to understand fuzzy concepts embedded in human language (including legalese) is not one of them.

A separate strand of scholarship tends to propose principles for holding a human—such as the developer or user of an AI system—liable for harms that those systems cause. Theories that could support civil liability for developers and deployers could range from ordinary negligence, to products liability, to vicarious liability, to strict liability.

Tort liability and other ex post sanctions (such as fines) can be powerful tools for incentivizing people to develop and use AI with due care for the rights of others. But they will not be enough, especially for AI agents controlled by the government. The most obvious reason, perhaps, is that a wide range of powerful immunity doctrines protect the government and its agents from liability even for lawless action. Some of these doctrines may have a shaky legal footing, but for now (at least) they are robustly recognized by judicial precedent. Others are, for better or worse, inscribed into the constitutional structure itself.

More fundamentally, it is troubling to think that government employees should treat the law as merely a set of incentives. Government employees should refuse to obey illegal orders in the first instance, not merely accept responsibility after the fact. Consequently, there are protections in place for refusing illegal orders. The priority of obeying the law, rather than treating legal sanctions as a “price” to be paid, is further reflected in the fact that injunctive relief is the preferred remedy for constitutional violations. The president has a duty to “faithfully execute[]” our laws and swears an oath to faithfully execute his office. All of this affirms what common sense demands: Public servants must, to a significant extent, refrain from breaking the law ex ante, not merely accept ex post consequences for lawbreaking.

Coupling Legal Innovation With AI Alignment

Our paper is certainly not the first to propose that “autonomous agents will have to be built to follow human norms and laws.” But our discussion aims to fill some important gaps in the existing discourse.

The first gap is simply that, as the law stands, it is unclear how an AI could violate the law. The law, as it exists today, imposes duties on persons. AI agents are not persons, and we do not argue that they should be. So to say “AIs should follow the law” is, at present, a bit like saying “cows should follow the law” or “rocks should follow the law”: It’s an empty statement because there are at present no applicable laws for them to follow.

We propose that the legal system solve this problem by making AI agents “legal actors”: entities that bear legal duties but do not necessarily have any legal rights (as a legal person would). This makes sense for AI agents (but not cows or rocks), given that they can read natural-language laws and reason about whether a contemplated action would violate those laws. This move also lays the analytical foundation for imposing law-following requirements on AI agents: Once we treat AI agents as legal actors, we can then specify the set of laws they should obey and analyze whether the agents are properly obedient.

A separate problem that besets some of the existing literature is a failure to discuss the “alignment problem.” Simply put, the alignment problem is a theoretical argument, backed by empirical observation, that it is difficult to design AI systems that reliably obey any particular set of natural-language constraints. Although often discussed in other contexts, the alignment problem is of utmost relevance to the design of law-following AI: Ensuring that AI agents follow the law (even when they are told or incentivized not to) requires aligning them to law. The alignment literature also highlights some relevant problems that LFAI would have to solve: How can we be sure that AI agents will be as law-following when they are given free rein as when they’re being carefully watched? How can we be sure that there is not a “backdoor” in an AI system that would enable a user to override law-following constraints when, for example, the user enters a secret phrase? Anyone who wants to ensure that AI agents follow the law must contend with these and other problems identified in the AI alignment literature.

In our view, the alignment community should make aligning AI systems with the law one of its top priorities. We agree with the consensus in the alignment community that AI agents should be “intent-aligned”: They should generally do what the user tells them to do, or what’s in the user’s best interests. But most experts also agree that this will likely be insufficient to protect society’s interests, because some users will want to do bad things. So some additional set of guardrails is needed to prevent AI agents from carrying out undesirable actions directed by their principals.

Traditionally, the alignment community has proposed that “value-alignment”—side-constraints on AI grounded in extralegal ethical principles—fill this function. Some degree of value-alignment is probably desirable (since nobody thinks the law is a complete guide to moral behavior), but leaning too heavily on value-alignment can have serious downsides. Incidents like Google Gemini’s infamous depiction of racially diverse Nazi soldiers, combined with empirical evidence showing that leading AI applications have left-leaning political biases, have caused significant backlash over the concept of value-alignment.

But some source of constraints, beyond users’ intentions, remains necessary. The law, as produced by our democratic system of governance and interpreted by our courts, provides a legitimate and legible starting point for alignment beyond intent-alignment and avoids some of the political downsides of extralegal value-alignment. It is also consistent with the basic principles of principal-agent doctrine, which holds that agents only have a duty to obey lawful orders. Fortunately, it is also consonant with trends at frontier AI companies, which have begun to instruct their AI systems to obey the law, even when instructed or incentivized not to.

Implementing Law-Following AI

This, then, is our vision of LFAI: AI agents that are “loyal” to their principals (indeed, perhaps even more loyal than human agents), but that also refuse to break the law in their service. How, exactly, can we promote the widespread adoption of LFAIs?

For the purposes of this project, we do not have strong opinions on how AI agents controlled by private principals should be regulated. It seems obvious that it is preferable that such agents be law-following, but we do not necessarily want to make the stronger claim that private actors should be prohibited from employing non-law-following AI agents. Even if private actors use AI henchmen to cause harm, ex post tools like law enforcement and civil suits can likely provide a significant guardrail.

What we do feel strongly about, however, is the proposition that AI agents exercising the government’s most coercive domestic powers—such as surveillance, investigations, prosecutions, law enforcement, and incarceration—must be law-following. A government staffed by human henchmen, perfectly obedient to their principal, including their principal’s unlawful orders, would be intolerable; we are a government of laws, not of men. A government staffed by AI henchmen, who do not face criminal liability or reputational damage, would be even more intolerable.

How, then, might this be implemented? We can offer only a brief sketch here, but our vision is something like the following. To procure and operate AI agents, the government will need congressional funding. Congress could attach, as a condition of such funding, a requirement that such agents be law-following, with further specification of what “law-following” means and how it can be demonstrated. The government would thus need to prove that AI agents under its control would be law-following, based on a specified evaluation method, before it could deploy them.

The Law-Following AI Research Agenda

We have articulated our basic case for and vision of LFAI. But readers will doubtless have a number of questions. We do too. Our goal with this paper is to open, not conclude, a conversation about how the law should respond to the rise of AI agents. Accordingly, our article begins to articulate a research agenda that, if followed, should facilitate the drafting of laws mandating that government AI agents be law-following. Some of the most important questions, from our perspective, include:

Which laws must LFAIs follow? We do not want to claim that LFAIs must follow literally every law to which similarly situated humans would be subject. This would probably be overly burdensome and impracticable. It may make sense, then, to identify a set of laws that can safeguard the U.S. legal order and citizens’ most basic interests. As society and AI technology evolve, we will also have to make new laws to bind LFAIs.

How rigorously must LFAIs follow the law, and how should they handle legal uncertainty? Since it is not always clear what the law commands, LFAIs will have to operate under legal uncertainty. Furthermore, perfect obedience to many laws is not necessary and is likely suboptimal. So we will need to specify some standard for how rigorously an LFAI must obey laws ex ante.

How should “AI agent” be defined? AI agency is a continuum, not a binary, but we still need to specify which agentic AI systems must be law-following. We have gestured toward a definition of AI agent above, but it is not designed to be a proper legal definition.

When should government AI agents be required to be LFAIs? The answer may not be “always,” because we probably want to give the government leeway to experiment with new technologies without too many ex ante hurdles. And certain government functions (e.g., designing informational materials for national parks) likely do not raise the core threats to life and liberty that LFAI aims to avoid.

How should we apply laws with mental state elements to AI agents? How can we tell, for example, if an AI agent “intended” some harm?

Would requiring AI agents controlled by the executive branch to be LFAIs impermissibly intrude on the president’s authority to interpret the law for the executive branch? We think there are good arguments that it would not, but given the current Supreme Court’s solicitude for broad claims of executive power, we would like the argument for the constitutionality of LFAI mandates to be airtight.

A longer list of possible research questions is enumerated in Part VI of our paper.

***

The U.S. may be about to enter an age in which most government power is exercised through AI agents. Many leading developers of these agents believe that sufficiently powerful AI agents will arrive within a few years. Even if it takes much longer than that, re-imagining our social and economic institutions to withstand the resulting shock is an urgent task. So, too, with our legal institutions. To preserve the American vision of a nation in which the law is king, we will need to evolve both the law itself and our technology. The American legal system needs to recognize a new type of actor that can heed and comply with its commands. And as they take over critical functions in public governance, our most advanced AI technologies need to rigorously comply with the same standards we expect of human officials: They should obey the law.