Decoding the Defense Department’s Updated Directive on Autonomous Weapons

The update to the 2012 directive provides clarity and establishes transparent governance and policy, rather than making substantial changes.

In 2012, the U.S. Department of Defense introduced Directive 3000.09, “Autonomy in Weapon Systems.” It was one of the first policies of its kind, issued as militaries began to consider the impact of advances in autonomous technologies and artificial intelligence (AI), concepts that until then had belonged largely to science fiction. As a result, it sparked widespread interest and discussion among the public, civil society, and nongovernmental organizations.

However, this attention quickly devolved into confusion and misinterpretation.

Human Rights Watch, for example, mischaracterized the original directive as “the world’s first moratorium on lethal fully autonomous weapons.” In reality, the policy placed no restrictions on either development or use, and its “lifespan” was simply a feature of all Defense Department directives, which must be updated, canceled, or renewed every 10 years. This reading led some observers to believe that the department was merely stalling its pursuit of so-called killer robots. In part, this misinterpretation contributed to the birth of Stop Killer Robots, an international coalition calling for a total ban on fully autonomous weapons.

Ironically, within the department itself, there was a parallel confusion that ran in the opposite direction. The policy outlined a supplemental review process for weapons systems that reached a certain level of autonomy, but the scope and purpose of this review were unclear. Some leaders within the department interpreted it conservatively, as a de facto bureaucratic barrier to developing autonomous systems.

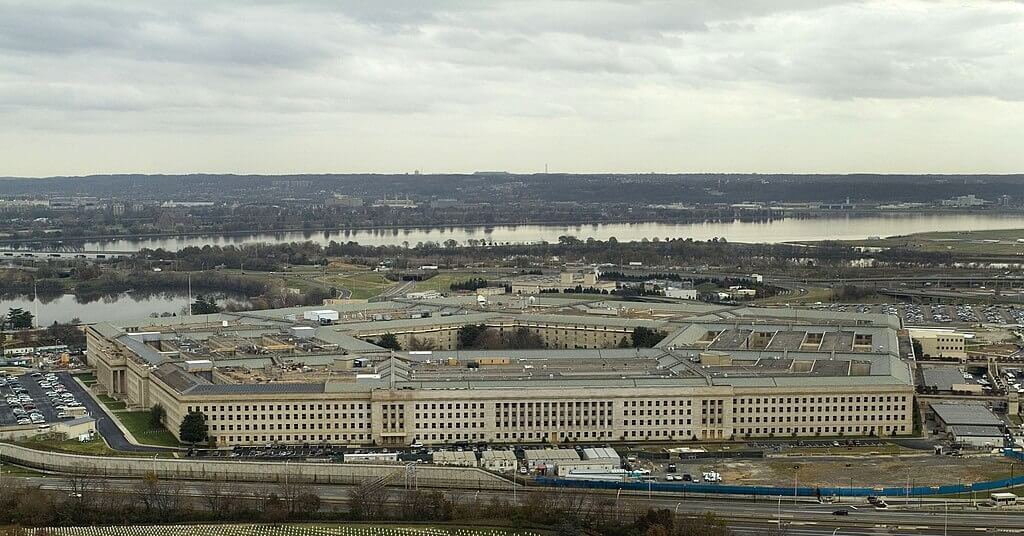

For the past decade, Directive 3000.09 has been the primary U.S. policy governing the use of autonomous technologies in weapons systems. However, its lack of clarity has cast a shadow over the development of emerging technologies, including AI, that bring any degree of autonomy to the battlefield. When the directive’s 10-year term expired in late 2022, the Pentagon decided to update it. The focus of the update is to provide clarity and establish transparent governance and policy, rather than to make substantial changes.

A Decade in the Making: Keeping Pace With Rapidly Developing Technologies

Though not substantively different from the original, the update is critical in bringing U.S. policy up to speed with rapidly developing technologies. The new and improved Directive 3000.09 paints a clearer picture of which autonomous and semi-autonomous systems require a greater degree of bureaucratic scrutiny. It represents an important step forward in balancing the speed of technology adoption with safety and ethical considerations, especially as the growing integration of AI could make autonomous weapons systems more likely in the future.

In the decade since the directive was first issued, the field of autonomous technologies has grown rapidly, with new advances and capabilities emerging nearly every week. For example, in November 2022, OpenAI released ChatGPT, a highly capable chatbot built on a large language model, which can suggest ideas for a 10-year-old’s birthday party, write code, generate themed poems, and even produce crochet patterns, while also recalling earlier exchanges within a “conversation.”

As these technologies have progressed, however, the use of terms such as AI, autonomy, machine learning, and natural language processing has become increasingly confusing. These terms were often used interchangeably in the past, but as the field has grown, it has become clear that they describe distinct areas of research and development. A major example of this confusion can be seen in the debate over lethal autonomous weapons systems (LAWS), the term used in United Nations dialogues to refer to autonomous weapons systems. That discussion has often conflated AI with autonomy, making it difficult to develop effective AI policy separate from its use in specific weapons systems. Moreover, most discussions have focused on outright bans. Such bans risk being ineffectual: by missing the nuances and differences between the relevant technologies, they fail to capture the most common uses of autonomous systems in military contexts and leave loopholes for states that wish to pursue development anyway.

The increasing use of AI on the battlefield in the Russia-Ukraine war also makes the update to the Defense Department’s directive timely. AI is being used, for example, to improve the targeting of artillery systems, create deepfake propaganda, translate and categorize language in real time, and identify soldiers killed in action through facial recognition. Similarly, there is an almost unprecedented reliance on remotely piloted capabilities, uncrewed vehicles, and loitering munitions with ever-increasing degrees of autonomy, whether AI-enabled or not. These technologies are further blurring the lines between AI, autonomy, and military systems, and they are becoming commonplace on the battlefield and in state arsenals.

According to a 2019 Congressional Research Service report, the United States was not then developing (semi-) autonomous weapons systems, and “no weapon system is known to have gone through the senior-level review process to date.” There is no evidence that this has changed, but it could within the next few years. With recent developments in both technological capabilities and geopolitical concerns, there are more questions than ever about the potential for autonomous weapons systems, what might constitute one, and when their use might be appropriate.

The Need for Clarity in a Rapidly Evolving Landscape

Since Directive 3000.09 was established, there has been a widespread misperception, both inside and outside the Defense Department, that it bans the development of certain kinds of weapons. In fact, the policy neither prohibits nor permits the development of any specific type of system. It simply specifies when a system with a certain elevated degree of autonomy must undergo an extra level of scrutiny and formal review, on top of the regular bureaucratic hoops every system has to jump through, before it can be developed.

The update also explicitly accounts for more realistic applications of the technology. For example, it adds direct references to AI and draws a clear distinction between AI and autonomy, something missing from the 2012 version. The directive describes which additional policies (such as the AI ethical principles) and guidance (such as testing and evaluation of the reprogrammability of autonomy algorithms) apply to AI-enabled autonomous systems. This was designed to account for the “‘dramatic, expanded vision’ for the role of AI in future military operations.” Furthermore, the update explicitly requires existing systems to undergo the additional review if they are modified or updated in ways that add autonomous functionality, even if the original, underlying system has been in use for years. In this way, it better accounts for the cyclical nature of technology development, allowing these capabilities to evolve without stifling innovation or letting them outgrow regulation.

Critically, the refresh acknowledges the broader organizational changes the Defense Department has undergone in recent months to streamline its AI, autonomy, and other emerging technology efforts and to “make a drastic move from a hardware-centric to a software-centric enterprise.” These include the creation of the Responsible AI Strategy and Implementation Pathway and the reorganization of the Chief Digital and Artificial Intelligence Office.

Finally, the directive establishes the Autonomous Weapon Systems Working Group. The body is designed not only to advise on specific systems under review but also to facilitate the review process in general, so that senior advisers have the evidence they need to judge whether a system can be developed safely and in line with established ethical and normative principles. The hope is that the working group, together with the directive’s more thorough definitions and process descriptions (complete with flowcharts), will help demystify some of the department’s internal processes. This is increasingly important given persistent concerns that the Defense Department lacks sufficient AI and STEM literacy, talent, and training.

Looking Toward the Future: Ensuring Responsible Governance and Policy

Overall, the new directive on autonomous weapons aims to bring much-needed clarity and precision to the complex and ever-evolving landscape of AI and autonomy. It addresses confusion both inside and outside the Defense Department, tackling the definitional, categorization, and transparency issues surrounding these capabilities, and it reinforces the department’s recent efforts to overcome these challenges. Given the rapid advances in autonomous technology, future governance efforts will need to differentiate and define these technologies and their applications in order to establish effective policies and regulations. The revision of Directive 3000.09 is a significant step in this direction, providing a clear and comprehensive framework for how the U.S. military will design and use autonomous weapons in future conflicts. By setting the record straight on the realities and misperceptions surrounding these technologies, the department is demonstrating its commitment to the responsible and ethical use of AI and autonomy in military operations.